I have breakfast most mornings at a local diner, which can be a little noisy. Shouted orders, tables being bussed, lots of conversation. What you’d expect from a busy diner.

As I do every morning, I put in my AirPods to watch a video… and the room became dead silent. I don’t know how else to describe it. For a split second I thought something might be wrong with my hearing, but when I removed my AirPods all the normal sounds came flooding back.

Let’s jump back a couple of weeks to when I got my new AirPods 4 (with the Adaptive Noise feature). I played with that for a minute or two, then turned it off, intending to experiment later.

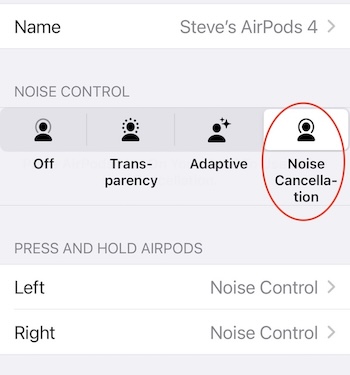

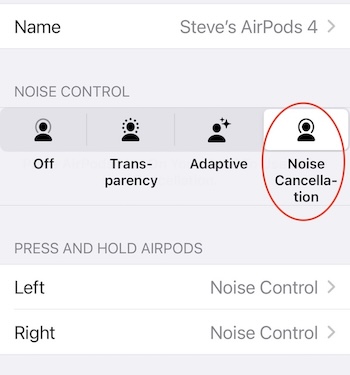

Apple pushed an update to iOS a couple of days ago and I’m guessing the “noise control” feature got reset. Here are the four settings:

1. Off: This disables any noise control, meaning you won’t get any additional noise isolation or transparency. You hear everything around you naturally.

2. Transparency: This setting allows external sounds to pass through so you can hear what’s happening around you while still listening to audio. It’s useful for staying aware of your environment.

3. Adaptive: This new setting automatically adjusts the level of noise cancellation and transparency in response to your surroundings. It tailors the experience based on the noise levels and movements around you.

4. Noise Cancellation: This mode blocks out external sounds by using microphones to pick up ambient noise and counter it with anti-noise signals. It’s great for immersive listening in noisy environments.

I wear ear protectors when I’m shooting a gun, and I’ve stuck little rubber plugs in my ears when trying to sleep in a noisy hotel room. And I’ve recently started wearing big over-the-ear protectors when driving the Land Rover. But I’ve never experienced this kind of near-silence. Almost eerie.