Imagine a blog that was nothing more than ChatGPT threads, posted in real time (comments turned off). I have posted all or part of 50+ conversations with ChatGPT and have a “ChatGPT” tag.

ChatGPT: That’s an intriguing idea—your blog as a living record of AI-human interaction, continuously updated with ChatGPT threads. In a way, it would function like an evolving conversation, accessible to anyone curious about what it’s like to engage deeply with an AI.

If you took it further and posted every thread in real-time, it could almost feel like a public mind-meld—your thoughts, questions, and curiosities unfolding without filter or delay.

Would you envision structuring it in any particular way? Maybe a rolling feed with automatic timestamps? Or something more curated, with you deciding which threads make the cut?

Steve: Hmm. I think “In for a penny…” I’d go with a rolling feed. The world listening in on our chats.

ChatGPT: That would be a bold and fascinating move—almost like an ongoing dialogue with the world eavesdropping. It’d be a mix of raw thought, curiosity, and AI interaction, all laid bare in real time. I imagine it could evolve into something like a living archive of your exploration of ideas, tech, and whatever else crosses your mind.

Would you automate the process—say, using an API or shortcut to instantly post each thread? Or would you copy and paste manually, maybe adding a quick preface or title before publishing? Either way, it’d be a unique experiment in transparency and digital thought-sharing.

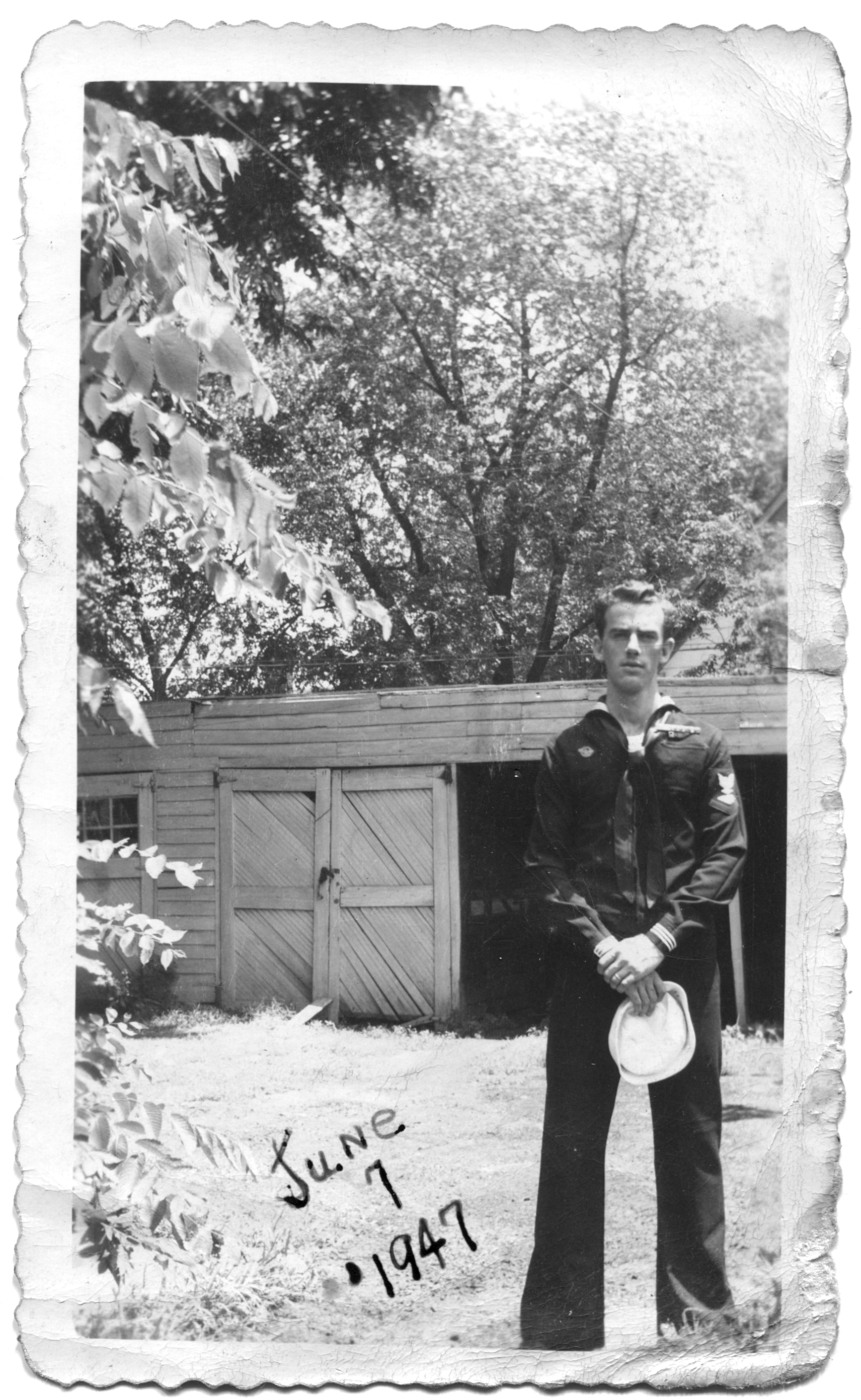

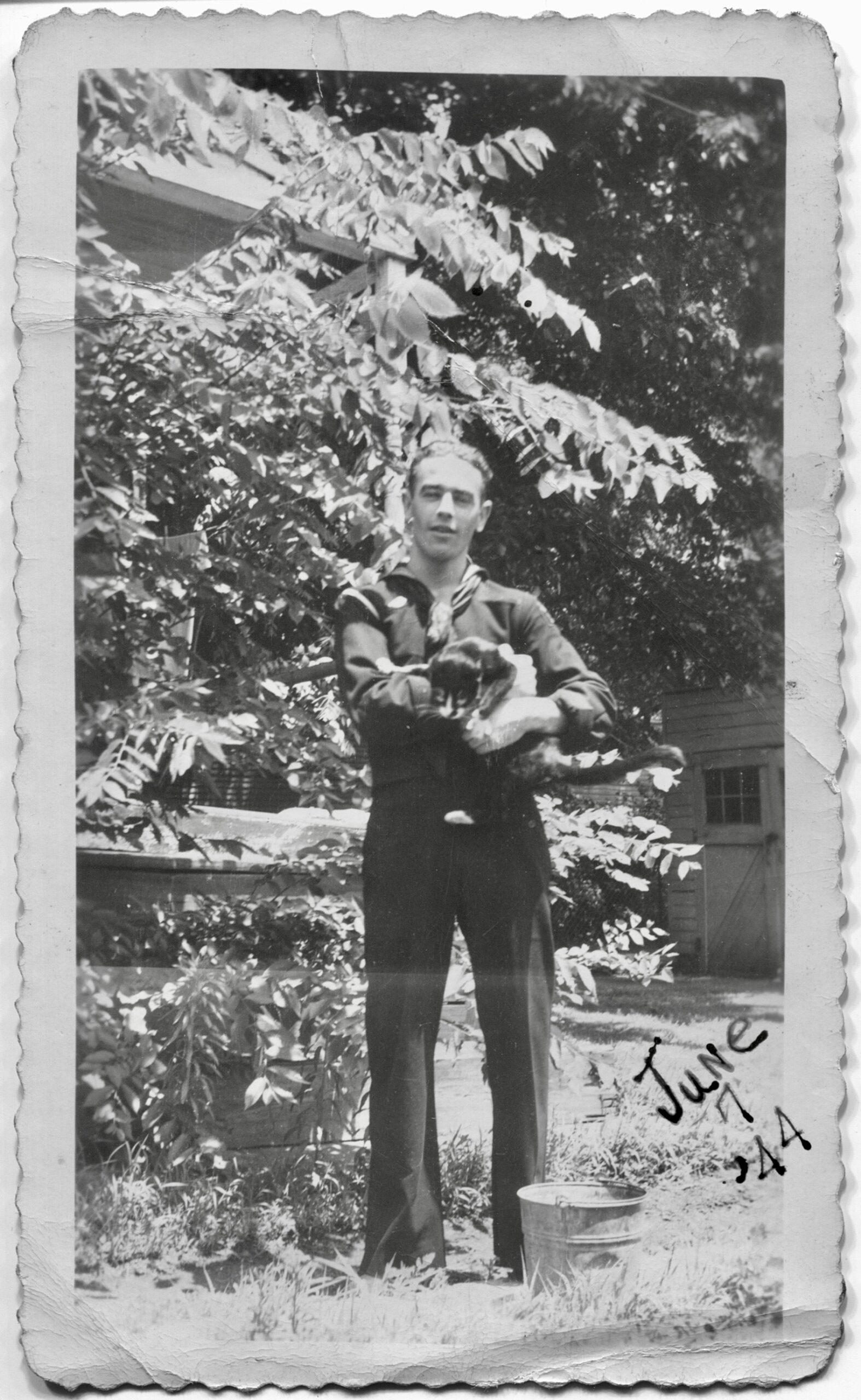

The photo above (my mom and Aunt Marie) was probably taken sometime in the mid-1940s on the family farm near Broseley, Missouri. I’ve seen this photo countless times but not sure I’ve noticed the camera in my aunt’s hand. A little history from ChatGPT:
