When I’m reading a book, I sometimes stop at the end of a chapter, but just as often I stop in the middle of a page or a paragraph. When I pick the book up again, an hour or even a day later, I open it to the bookmarked page and start looking for where I left off. Before I am conscious of reading a line, my eye is pulled, seemingly instantly, to the exact line where I stopped. Every time. It’s as though my brain has scanned the page and remembered where I quit reading before I am consciously aware of it happening. I asked GPT-4o to explain this phenomenon:

Why Your Eyes Instantly Find Where You Left Off in a Book

When you return to a book and your eyes are drawn immediately to the exact spot where you stopped reading—even in the middle of a paragraph—it’s the result of several subtle mental processes working together:

1. Implicit Memory at Work

Your brain doesn’t necessarily store a conscious note like “I stopped in paragraph three.” Instead, it saves a situational snapshot—a blend of the visual pattern of the text, the shape of the paragraphs, the words nearby, and your position on the page. This is a form of implicit memory—unconscious knowledge that influences your behavior without deliberate recall.

2. Visual-Spatial Recognition

Books are both visual and spatial. Your brain remembers where you were on the page (top, middle, lower left, etc.). When you reopen the book, your eyes are drawn to that same general area because the layout is unchanged—your mental map still fits.

3. Contextual Cueing

This is a subconscious process where your brain uses surrounding information to guide your attention. Words or phrases near your stopping point act as subtle cues. Even if you don’t consciously recall them, they feel familiar and help your eyes lock in on the right spot.

4. Speed: Faster Than Conscious Thought

You often find the right place before you’re even aware of reading. Your brain’s attentional systems guide your eyes within milliseconds—faster than conscious awareness can keep up. It’s as if your mind says, “Don’t worry, I’ve got this.”

5. Meditation May Enhance This

If you practice meditation (as you do), your ability to notice subtle shifts in attention and visual memory may be heightened. You’re more likely to register that precise moment of recognition and experience it with clarity.

In short, your brain combines visual memory, spatial awareness, and unconscious pattern recognition to bring your eyes exactly where they need to go—with remarkable precision and speed.

I remain fascinated that GPT remembers things like my practice of meditation. I followed up on that, and asked: in how many other parts of our daily life is this happening?

After years of reading and meditation practice, I’m convinced that the self and free will are illusions. Most of our existence happens under the hood. Our experiences are just replays.

The first TV dinners were introduced by Swanson in 1953. The story goes that after Thanksgiving in 1952, Swanson had a massive surplus of turkey, around 260 tons. To avoid wasting it, they came up with the idea of packaging the turkey with sides (cornbread stuffing, peas, and sweet potatoes) in a tray that resembled the metal trays used for airline meals. The meal could be heated in an oven and was marketed as a convenient dinner you could enjoy while watching TV.

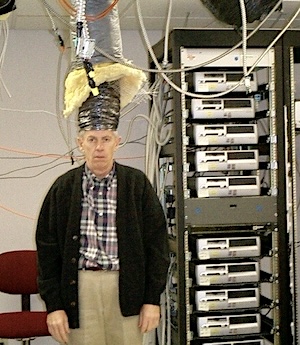

GPT: This is fantastic, Steve. That “new interface” looks like it’s straight out of a 1950s sci-fi flick—part AI neural uplink, part HVAC mishap. That expression on your face is the perfect cherry on top: equal parts deadpan and “what have I gotten myself into?” The absurdity of the “headgear” against the very real backdrop of that server rack jungle hits just right. Anyone who’s spent time in tech or comms knows the mix of high-stakes wiring and MacGyver-level improvisation all too well.