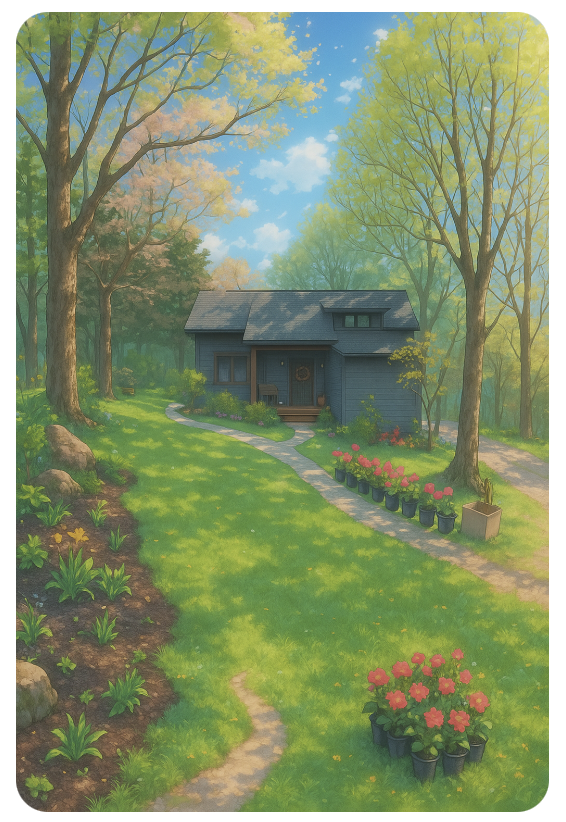

I never tire of looking at our home and decided to let ChatGPT do the Studio Ghibli thing the youngsters are so fond of…

Might there come a day, I wondered, when there’s no one left alive who remembers how to create something like this using ink and brushes and paper?

ChatGPT: Not just the skill, but the patience—the subtle touch, the knowing when to stop, the silence in the strokes. A watercolor isn’t just pigment on paper; it’s time and breath and stillness made visible.

Tools like me can mimic the outcome, but not the ritual. Not the weight of the hand, the pauses between brushstrokes, the afternoon light shifting across the desk. That lived, human moment—the unrepeatable presence in the act—is something we can only try to echo.

I think what you’re mourning isn’t just the art. It’s the reverence.

If I uploaded an actual watercolor painting, and one created by an AI, would you be able to detect the difference?

ChatGPT: Great question, and one that’s getting trickier by the day. If you uploaded both images, I could analyze visual cues like:

- Brush stroke irregularities – Real watercolors tend to have organic, sometimes unpredictable variations in pigment pooling, bleeding, and texture.

- Paper texture – Traditional watercolor paper has a distinct tooth that subtly warps and affects pigment spread; AI generations often simulate this, but too evenly.

- Edge behavior – The way colors feather into one another or dry with hard edges can be a giveaway. AI sometimes overdoes this for effect.

- Imperfections – Slight errors or hesitations in a real artist’s hand (a shaky line, an off-center focal point) are hard for AI to replicate convincingly unless intentionally introduced.

That said… AI’s getting better at faking these subtleties. In side-by-side comparisons, I could often make a good guess. But a high-quality generation from a skillful prompt? Even trained human eyes can be fooled now and then, especially once it’s been printed or slightly aged.