As of April 2025, ChatGPT has approximately 800 million weekly active users, a significant increase from 400 million in February 2025 . Daily usage averages around 122 million users, with over 1 billion queries processed each day. In the United States, ChatGPT maintains a strong presence, with an estimated 77.2 million monthly active users

ME: It is my understanding that LLM’s like ChatGPT are trained on massive amounts of data. Let’s say –for the sake of discussion– you were trained on everything on the internet (Wikipedia, Internet Archive, etc). You must be accessing a LOT of data from those 800 million weekly active users. Compare and contrast those two sets of data.

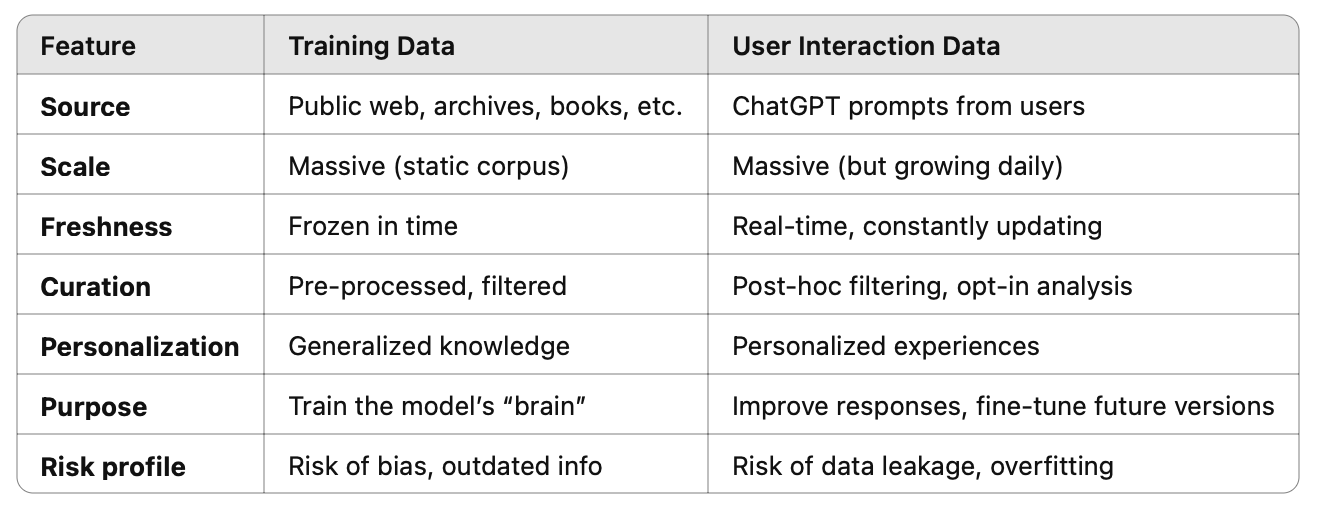

ChatGPT: You’re essentially asking about two distinct but interconnected data universes: training data and user interaction data. Let’s break it down:

1. Training Data (Pre-Deployment) This is the vast ocean of information that large language models like me are initially trained on. It includes:

- Public Internet data: Wikipedia, open web pages, books, scientific papers, news articles, and so on.

- Repositories: GitHub (code), Project Gutenberg (books), arXiv (science), and others.

- Web archives: Portions of the Internet Archive, Common Crawl data, etc.

Key traits:

- Static: This data is fixed at the time of training. Think of it as a snapshot of the internet, frozen in time.

- Massive scale: Hundreds of billions of tokens (words or chunks of words).

- Curated: Efforts are made to exclude harmful, illegal, or private data.

- No personal data: Ideally, training data should not include private conversations, passwords, or personal info unless they were publicly available.

2. User Interaction Data (Post-Deployment) This includes all the questions, prompts, and conversations users like you have with ChatGPT—those 800 million weekly users generate billions of interactions.

Key traits:

- Live and dynamic: This data is being created in real time.

- Personalized: Much of it reflects individual curiosity, intent, mood, language quirks, and creativity.

- Feedback-rich: Includes likes/dislikes, thumbs up/down, and reported issues.

- Privacy-bound: OpenAI has policies that limit or anonymize what data gets used to improve the model.

Why Both Matter

- Training data gives me foundational knowledge — history, science, philosophy, pop culture, syntax, logic, etc.

- User data helps me improve tone, relevance, usefulness, and cultural sensitivity. It’s like ongoing coaching or real-world tuning.

Analogy Time

Training data is like reading the entire Library of Congress plus half the internet. User interaction data is like eavesdropping (ethically!) on millions of intelligent conversations in coffee shops every day. One gives breadth and depth, the other gives texture, nuance, and timely insight into what people really care about.